Introduction

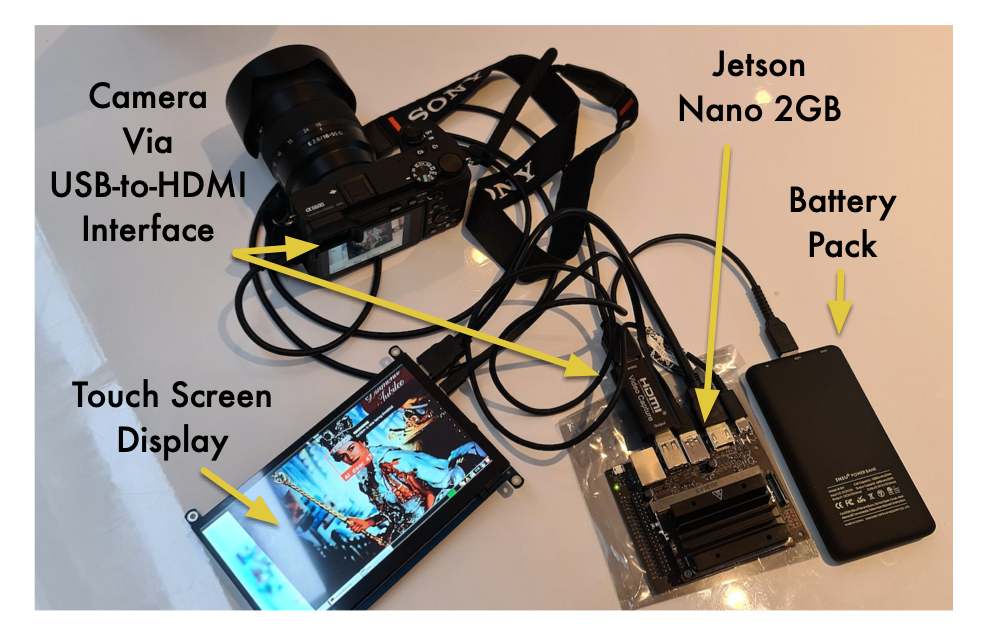

The NVIDIA Jetson Nano is a compact, energy-efficient single-board computer designed for artificial intelligence (AI) and machine learning (ML) applications at the edge. Equipped with a 128-core NVIDIA Maxwell GPU, a quad-core ARM Cortex-A57 CPU, and 4 GB of RAM, it offers powerful parallel computing performance in a small form factor. The Jetson Nano runs Linux (via NVIDIA’s JetPack SDK) and supports frameworks like TensorFlow, PyTorch, Caffe, and MXNet, making it ideal for AI developers, students, and hobbyists building computer vision, robotics, and deep learning projects.

What sets the Jetson Nano apart from other small computers is its ability to handle demanding AI workloads such as image classification, object detection, and speech processing in real time while drawing only 5 to 10 watts. It exposes GPIO, I²C, SPI, and UART pins for hardware interfacing, along with USB, HDMI, and camera interfaces, which makes it flexible enough for robotics, drones, smart cameras, and autonomous devices. With strong community support and developer resources, the Jetson Nano serves as both an educational tool for learning AI and a practical platform for prototyping production-grade AI systems.
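As a rough illustration of the GPIO interfacing mentioned above, the sketch below toggles one header pin using NVIDIA's `Jetson.GPIO` Python library. The pin number (12), the cycle count, and the helper names `blink` and `run_on_jetson` are assumptions chosen for the example, not something the article prescribes. The GPIO module is passed in as a parameter so the logic can be exercised without hardware.

```python
import time


def blink(gpio, pin, cycles=3, delay_s=0.5, sleep=time.sleep):
    """Drive `pin` high then low `cycles` times using a GPIO-like module."""
    gpio.setmode(gpio.BOARD)                      # physical header pin numbering
    gpio.setup(pin, gpio.OUT, initial=gpio.LOW)   # configure as output, start low
    try:
        for _ in range(cycles):
            gpio.output(pin, gpio.HIGH)
            sleep(delay_s)
            gpio.output(pin, gpio.LOW)
            sleep(delay_s)
    finally:
        gpio.cleanup()                            # release the pin even on error


def run_on_jetson(pin=12):
    """Hypothetical entry point; pin 12 is an assumed wiring choice."""
    # Imported lazily so this file still loads on machines without Jetson.GPIO.
    import Jetson.GPIO as GPIO
    blink(GPIO, pin)
```

On a Jetson board with an LED and resistor wired to the chosen pin, calling `run_on_jetson()` would be the starting point. Accessing GPIO typically requires the user to have the right group permissions.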

Jetson Software Architecture

NVIDIA positions Jetson software as its most advanced AI software stack to date, purpose-built for edge computing workloads where physical AI, generative models, and real-time intelligence converge. At the highest level, Jetson software is optimized for humanoid robotics and other machines that interact dynamically with the physical world. It is designed for generative AI, enabling developers to deploy large language models (LLMs), diffusion models, and vision-language models (VLMs) directly at the edge. Jetson software covers the whole developer journey, from rapid prototyping to production deployment.

At the platform level, NVIDIA JetPack is intended to support a broad range of generative AI models. JetPack is tuned to meet the latency and determinism requirements of real-time applications such as robotics, medical imaging, and industrial automation. It draws on the NVIDIA AI stack from the cloud to the edge, with microservices and support for agentic workflows that simplify the integration of perception, planning, and control in intelligent systems.

At its core, Jetson software includes the latest JetPack SDK, which is architected for the next-generation Jetson Thor platform. It is built on a redesigned compute stack featuring Linux kernel 6.8 and Jetson Linux, which is derived from Ubuntu 24.04 LTS. It introduces several technical advancements: the Holoscan Sensor Bridge enables flexible, high-throughput sensor data integration; Multi-Instance GPU (MIG) support brings resource partitioning to Jetson, allowing concurrent and isolated workloads; and a PREEMPT_RT kernel provides deterministic scheduling for mission-critical, real-time use cases. JetPack also includes support for Jetson Platform Services (JPS). Note that the original Jetson Nano is supported only by the older JetPack 4.x line, so the features described in this paragraph do not apply to it.
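To make the PREEMPT_RT point concrete, here is a minimal sketch of how an application might check whether it is running on a real-time kernel before enabling latency-sensitive code paths. It relies on two heuristics that are assumptions rather than a documented JetPack API: RT-patched kernels have commonly exposed a `/sys/kernel/realtime` flag, and the kernel version string usually contains `PREEMPT_RT` (or `PREEMPT RT` on older builds). The function names are hypothetical.

```python
import platform
from pathlib import Path


def version_reports_rt(version_string):
    """Return True if a `uname -v` style string advertises PREEMPT_RT."""
    # Older RT kernels print "PREEMPT RT" with a space, so normalise first.
    normalized = version_string.upper().replace(" ", "_")
    return "PREEMPT_RT" in normalized


def kernel_is_realtime(sysfs_flag=Path("/sys/kernel/realtime")):
    """Best-effort check; treat the result as a hint, not a guarantee."""
    try:
        # Assumption: RT kernels expose this file containing "1".
        if sysfs_flag.read_text().strip() == "1":
            return True
    except OSError:
        pass  # file missing or unreadable: fall back to the version string
    return version_reports_rt(platform.version())
```

A robotics control loop could call `kernel_is_realtime()` at startup and log a warning when it returns `False`, rather than silently assuming deterministic scheduling.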

JetPack combines support for generative AI, real-time performance, and a rich ecosystem to accelerate the development of intelligent, responsive systems across humanoids, robotics, healthcare, and industrial automation.

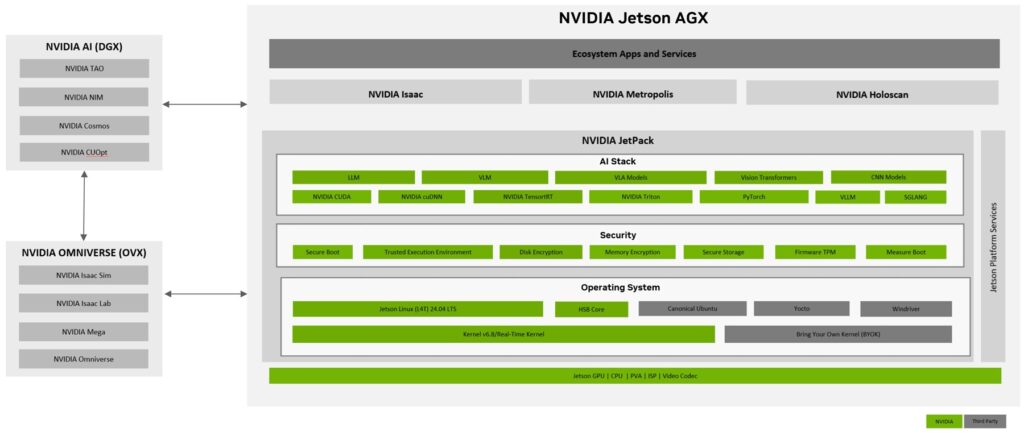

The following diagram illustrates the full Jetson software stack. Built on the Jetson GPU, the stack includes the JetPack layer and reference frameworks, with developer applications sitting at the top. This layered architecture enables developers to easily build applications using the components provided. The Jetson software stack also offers seamless integration with AI training systems and Omniverse simulation platforms.

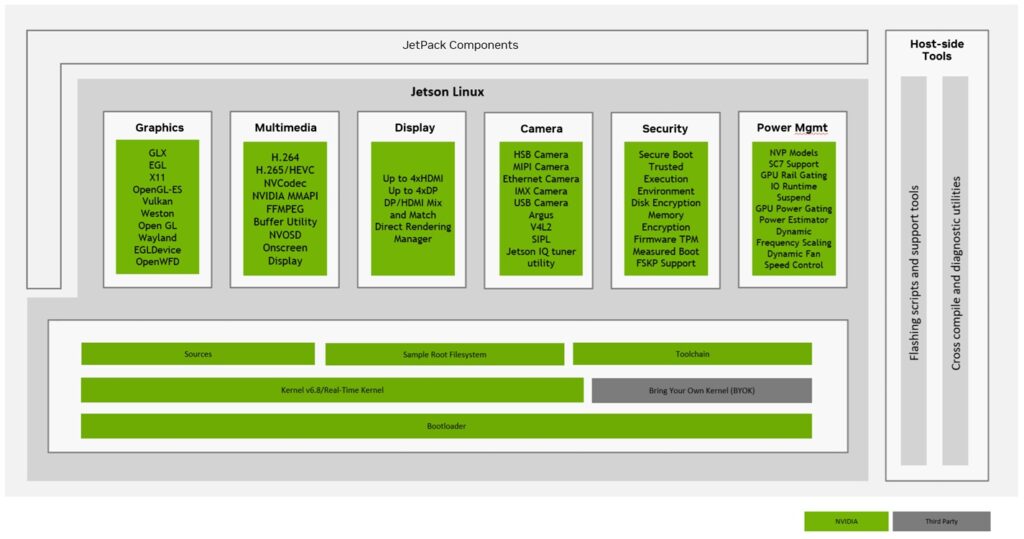

The following diagram illustrates the structure of the Jetson Linux stack in more detail. The stack provides a board support package (BSP) and hosts modules from NVIDIA, the community, and third parties. NVIDIA also provides an extensive set of host-side tools.

At the core of the stack lies the BSP, which includes the kernel, bootloader, sample root filesystem, toolchain, and sources, enabling full customization and low-level development. Atop the BSP lie critical software blocks across domains such as graphics (like Vulkan, OpenGL, and Wayland), multimedia (like NVCodec and FFmpeg), display (supporting HDMI, DP, and direct rendering), camera frameworks (like V4L2 and Argus), security features (like secure boot, TPM, and encryption), and power management (like SC7, rail gating, and dynamic scaling). These modules provide the foundation for high-performance, power-efficient embedded AI applications.
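As one example of how the camera layer is commonly reached from application code, the sketch below builds a GStreamer pipeline string around `nvarguscamerasrc`, the Argus-backed source element shipped with Jetson Linux. The default resolution, frame rate, and the function name `csi_pipeline` are assumptions for illustration, and the sensor modes actually available depend on the attached camera.

```python
def csi_pipeline(sensor_id=0, width=1280, height=720, fps=30, flip_method=0):
    """Return a GStreamer pipeline string for a MIPI CSI camera (illustrative)."""
    return (
        # Capture through the Argus stack into NVMM (device) memory.
        f"nvarguscamerasrc sensor-id={sensor_id} ! "
        f"video/x-raw(memory:NVMM), width={width}, height={height}, "
        f"framerate={fps}/1 ! "
        # Hardware-accelerated conversion and optional flip.
        f"nvvidconv flip-method={flip_method} ! "
        "video/x-raw, format=BGRx ! videoconvert ! "
        # BGR frames delivered to the application; drop stale frames.
        "video/x-raw, format=BGR ! appsink drop=true"
    )
```

If OpenCV on the device was built with GStreamer support, the string could be passed to `cv2.VideoCapture(csi_pipeline(), cv2.CAP_GSTREAMER)`. Whether that holds depends on how OpenCV was installed.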

The entire stack is complemented by a suite of host tools for cross-compilation, diagnostics, and device flashing, providing a complete, end-to-end development environment for edge AI solutions.

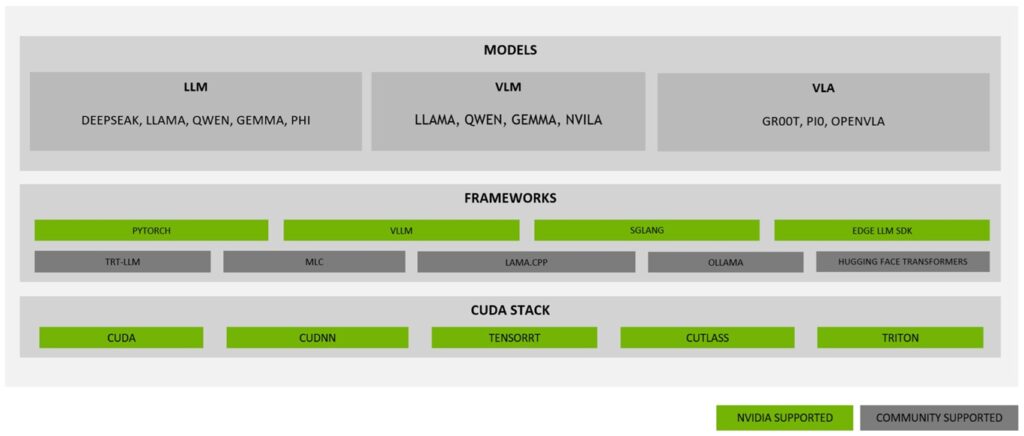

Above the Jetson Linux stack lies the NVIDIA AI Compute Stack. It begins with the CUDA layer, which comprises the latest CUDA-X libraries, including CUDA, cuDNN, and TensorRT. The Frameworks layer above it covers both NVIDIA-supported and community-supported AI frameworks, so the Jetson Thor software stack can run the most popular ones. Frameworks officially supported by NVIDIA, such as NVIDIA TensorRT, PyTorch, vLLM, and SGLang, are provided as regularly updated wheels and containers through the NVIDIA GPU Cloud (NGC). The stack also offers community-driven support for popular projects such as llama.cpp, MLC, JAX, and Hugging Face Transformers, with NVIDIA releasing the latest containers for these frameworks on Jetson through Jetson AI Lab.
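A quick way to see which of the frameworks named above are present in a given container or environment is to probe for their Python packages without importing them. The sketch below uses only the standard library. The import names in `CANDIDATES` are the usual ones for each project, but treat them as assumptions, since the package layout can differ between container images.

```python
import importlib.util

# Display name -> assumed top-level import name.
CANDIDATES = {
    "TensorRT": "tensorrt",
    "PyTorch": "torch",
    "vLLM": "vllm",
    "SGLang": "sglang",
    "llama.cpp (Python bindings)": "llama_cpp",
    "MLC": "mlc_llm",
    "JAX": "jax",
    "Hugging Face Transformers": "transformers",
}


def probe(candidates=CANDIDATES):
    """Map each display name to True if its package can be located."""
    found = {}
    for label, module_name in candidates.items():
        try:
            # find_spec locates the package without executing it.
            found[label] = importlib.util.find_spec(module_name) is not None
        except (ImportError, ValueError):
            found[label] = False
    return found


if __name__ == "__main__":
    for label, available in probe().items():
        print(f"{label:30s} {'yes' if available else 'no'}")
```

Because nothing is actually imported, the check stays fast and avoids initialising CUDA. It confirms only that a package is installed, not that it was built with GPU support.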

Jetson Thor can run state-of-the-art architectures across LLMs, VLMs, and vision-language-action (VLA) models. Popular model families, including DeepSeek, Llama, Qwen, Gemma, Mistral, Phi, and models from Physical Intelligence, are accelerated on Jetson Thor.

What the Jetson Nano Is Capable Of

The NVIDIA Jetson Nano is a single-board computer designed for AI, robotics, and edge computing projects, capable of handling GPU-accelerated tasks that go well beyond what a standard Raspberry Pi can do. With its 128-core Maxwell GPU, quad-core ARM Cortex-A57 CPU, and 4 GB of RAM, it delivers up to 472 GFLOPS of half-precision (FP16) performance while consuming just 5 to 10 watts of power. Developers use it to build real-time AI systems such as smart cameras for object detection, autonomous robots for navigation, drones with onboard vision processing, and voice assistants with local speech recognition. It supports popular AI frameworks like TensorFlow and PyTorch, can run models exported to ONNX, and integrates with the Robot Operating System (ROS) for robotics applications. With GPIO, I²C, SPI, USB, HDMI, and MIPI CSI camera interfaces, the Jetson Nano can connect to a wide range of sensors, motors, and other peripherals. Its ability to run deep learning models at the edge makes it well suited to low-latency, privacy-focused projects, and code developed on the Nano can generally be moved to more powerful Jetson boards like the Xavier NX or Orin for production use, usually after updating to the JetPack release those boards require.
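The figures quoted above lend themselves to some back-of-the-envelope arithmetic. The sketch below derives the compute efficiency at the 10 W end of the power range and the per-frame budget for a real-time pipeline. The 30 FPS target is an assumption chosen for illustration, and the results are theoretical upper bounds based on the peak figure rather than measured throughput. Dividing the peak by 5 W instead would likely overstate efficiency, since the peak may not be reachable in the lower power mode.

```python
PEAK_GFLOPS = 472.0   # peak figure quoted in the text
MAX_POWER_W = 10.0    # upper end of the quoted 5 to 10 W range
TARGET_FPS = 30.0     # assumed real-time target, not from the text


def gflops_per_watt(gflops, watts):
    """Compute efficiency as throughput divided by power draw."""
    return gflops / watts


def frame_budget_ms(fps):
    """Time available to process one frame, in milliseconds."""
    return 1000.0 / fps


def max_gflop_per_frame(gflops, fps):
    """Upper bound on arithmetic work per frame at peak throughput."""
    return gflops / fps


if __name__ == "__main__":
    print(f"{gflops_per_watt(PEAK_GFLOPS, MAX_POWER_W):.1f} GFLOPS/W")
    print(f"{frame_budget_ms(TARGET_FPS):.2f} ms per frame")
    print(f"{max_gflop_per_frame(PEAK_GFLOPS, TARGET_FPS):.2f} GFLOP per frame")
```

Under these assumptions a model has roughly 33 ms and at most about 15.7 GFLOP of work per frame. Real models reach only a fraction of peak, so the practical limit is lower.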