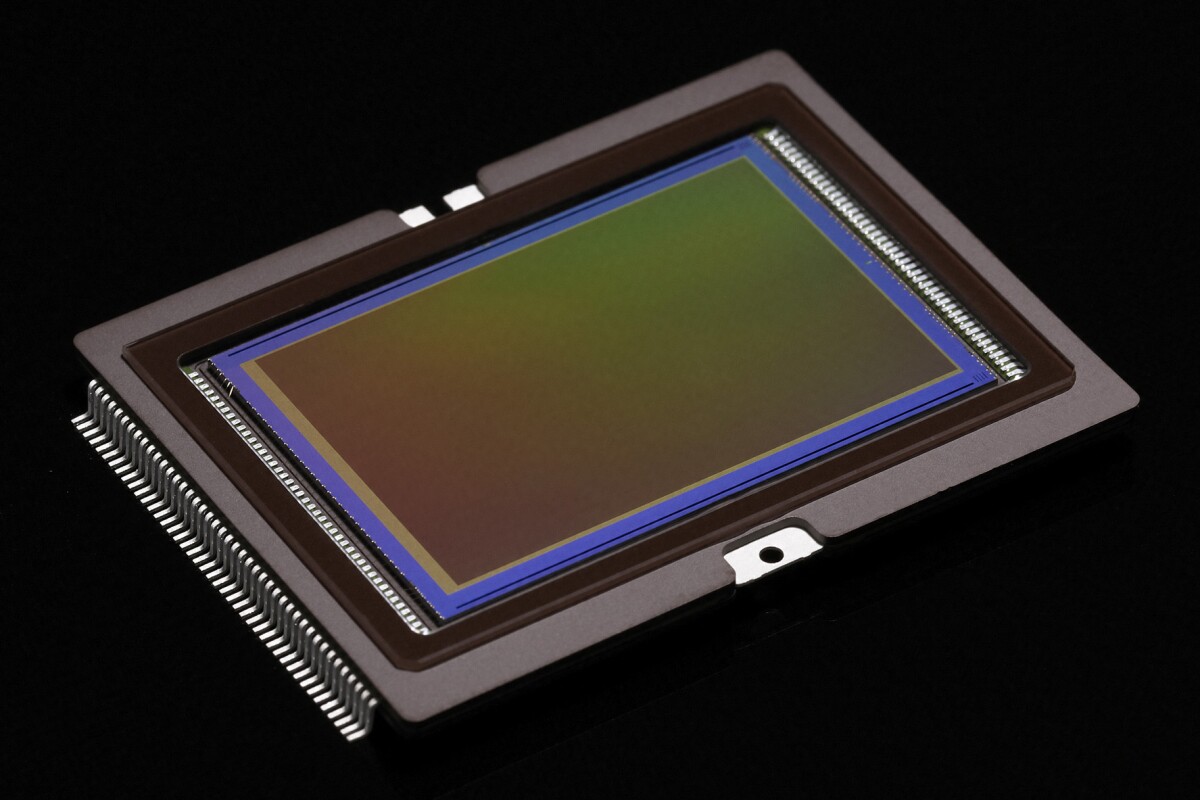

Image sensors are at the heart of modern digital imaging technology. They are the key components that convert light into electrical signals, enabling devices like cameras, smartphones, medical equipment, and even autonomous vehicles to “see” the world. From capturing everyday moments to powering advanced machine vision systems, image sensors play a vital role in bridging the gap between the physical and digital worlds.

There are different types of image sensors, such as CCD (Charge-Coupled Device) and CMOS (Complementary Metal-Oxide-Semiconductor), each offering unique advantages in terms of speed, sensitivity, and power efficiency. The continuous evolution of image sensor technology has led to higher resolutions, better low-light performance, and integration with AI for smarter imaging solutions.

Whether it’s photography, security, robotics, or industrial automation, image sensors are the invisible eyes of technology, making them one of the most impactful innovations in electronics and optics.

The History of Image Sensors

Image sensors did not appear overnight. The technology has a long history rooted in both physics and electronics. From early experiments with light-sensitive materials to today’s advanced CCD and CMOS devices, the story of image sensors is one of scientific discovery, innovation, and continuous improvement.

Early Concepts of Capturing Light (1800s)

The roots of image sensors can be traced back to the 19th century, when scientists first began experimenting with light-sensitive materials. In 1839, Louis Daguerre introduced the daguerreotype process, an early photographic method that captured an image on a silver-plated copper sheet made light-sensitive with a coating of silver iodide. Although this was chemical photography, not electronic, it set the stage for future innovations in light detection.

Another milestone was the discovery of selenium’s photoconductivity in 1873, which led to the first selenium photocells later in the 1870s. Selenium’s electrical resistance changes when it is exposed to light, making it one of the first materials shown to respond electrically to light. This principle became a stepping stone for the idea of converting light into electrical signals, which is the foundation of modern image sensors.

Explaining the Photoelectric Effect (1905)

The photoelectric effect, in which light knocks electrons out of a material, was first observed by Heinrich Hertz in 1887. In 1905, Albert Einstein published his groundbreaking paper explaining it: light delivers its energy in discrete packets (photons), and a single photon can free a single electron. This explanation not only won him the Nobel Prize in Physics (1921) but also laid the theoretical groundwork for all light-detecting technologies.

Einstein’s work inspired researchers to explore ways of building devices that could directly convert light into measurable electrical signals. This concept marked the true beginning of electronic imaging.

The First Electronic Television Cameras (1920s–1930s)

The next major leap came with the invention of television. In the 1920s and 1930s, inventors sought ways to electronically capture and transmit moving images.

- In 1923, Vladimir Zworykin filed a patent for an electronic television system. The iconoscope, the camera tube he developed from that work in the early 1930s, became one of the first practical electronic cameras. It used a photosensitive plate to capture an image and convert it into an electrical signal.

- In 1927, Philo Farnsworth demonstrated his image dissector tube, which scanned images electronically.

These technologies were bulky and limited in quality, but they represented the earliest forms of image sensors, as they converted light into electrical signals for visual reproduction.

Development of Solid-State Electronics (1950s–1960s)

The invention of the transistor in 1947 and the rise of solid-state electronics opened new doors for image sensor development. Instead of relying on vacuum tubes like the iconoscope, researchers started investigating semiconductor materials like silicon, which could be miniaturized and made more efficient.

During the 1950s and 1960s, scientists worked on experimental photodiodes and phototransistors, which could detect light at specific points. However, building a complete two-dimensional image sensor required arranging these elements into an array—a challenge that would be solved in the decades ahead.

The Invention of the CCD (1969)

A groundbreaking moment came in 1969 when Willard Boyle and George E. Smith, working at Bell Labs, invented the Charge-Coupled Device (CCD). The CCD was revolutionary because it could store and transfer electrical charges generated by light hitting a semiconductor surface, moving them across the chip for readout.

The CCD quickly proved to be an excellent technology for capturing images:

- It offered high sensitivity to light.

- It produced less noise than earlier sensors.

- It could be built into arrays to create complete digital images.

Boyle and Smith’s work earned them the 2009 Nobel Prize in Physics, and CCDs went on to dominate digital imaging for decades, especially in scientific, medical, and professional photography applications.

The Rise of CMOS Image Sensors (1970s–1990s)

While CCDs were gaining momentum, researchers also explored another technology: the Complementary Metal-Oxide-Semiconductor (CMOS) image sensor.

CMOS sensors were first conceptualized in the 1970s, but early designs suffered from high noise and lower image quality compared to CCDs. As a result, CCDs became the standard for most imaging devices through the 1980s and 1990s.

However, CMOS technology had one key advantage: it could integrate imaging functions and processing circuits onto the same chip, making it cheaper and more power-efficient. By the 1990s, advancements in semiconductor fabrication significantly improved CMOS sensor performance, paving the way for their widespread adoption.

The Digital Camera Revolution (1990s–2000s)

The late 20th century witnessed a major transformation in photography and consumer electronics. With CCDs and later CMOS sensors, the digital camera revolution began:

- In 1981, Sony introduced the Mavica, an electronic still camera that used a CCD but recorded its images as analog video frames rather than digital files.

- During the 1990s, digital cameras reached the consumer market and began replacing film-based cameras.

- In the 2000s, CMOS sensors matured and overtook CCDs in consumer devices like smartphones due to their lower cost, lower power use, and faster readout speeds.

This period marked the moment when image sensors transitioned from specialized scientific tools to everyday consumer products.

Image Sensors in the Smartphone Era (2007–Present)

The launch of the Apple iPhone in 2007 was a turning point for image sensor technology. Smartphones demanded small, efficient, and high-quality sensors that could fit into compact designs. CMOS sensors, with their scalability and low power requirements, became the standard.

Since then, smartphone makers have pushed sensor innovation further with:

- Backside-illuminated (BSI) CMOS sensors for improved low-light performance.

- Stacked sensors that separate the pixel and logic layers for faster processing.

- High-resolution sensors exceeding 100 megapixels.

- AI-powered image processing integrated directly into sensor systems.

Today, image sensors are not just about capturing pictures—they are central to applications like augmented reality (AR), facial recognition, medical imaging, autonomous vehicles, and robotics.

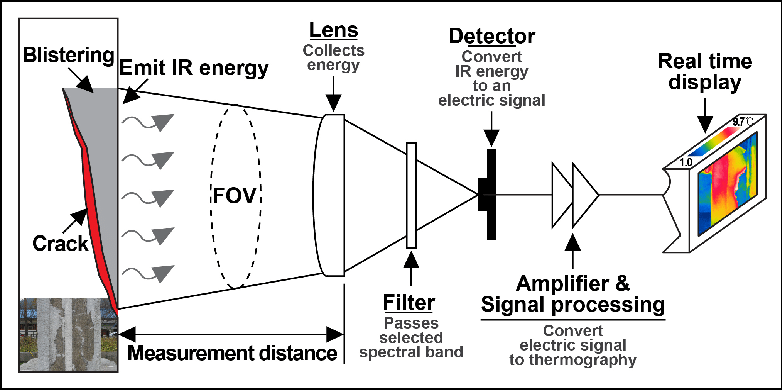

How Image Sensors Work

An image sensor is a semiconductor device that converts light into electrical signals, allowing digital cameras, smartphones, and other imaging systems to capture pictures and video. At its core, the sensor acts as the “eye” of the device, translating photons (light particles) into digital data that can be processed into an image.

The surface of an image sensor is covered with millions of tiny units called pixels. Each pixel contains a photodiode, a light-sensitive element that generates an electrical charge when struck by photons. The more light that hits a pixel during an exposure, the more charge it accumulates, up to the point where the pixel saturates. This charge represents the brightness of that part of the image.
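The relationship between light and charge can be sketched in a few lines of Python. This is a simplified model, not a description of any real sensor: the function name `pixel_charge` is hypothetical, and the quantum efficiency (fraction of photons that produce an electron) and full-well capacity (maximum electrons a pixel can hold) are illustrative values chosen for the example.

```python
def pixel_charge(photons: int,
                 quantum_efficiency: float = 0.6,
                 full_well: int = 10_000) -> int:
    """Electrons collected by one pixel during an exposure (simplified model).

    quantum_efficiency and full_well are assumed, illustrative values.
    """
    # Only a fraction of the incoming photons free an electron.
    electrons = round(photons * quantum_efficiency)
    # A pixel can hold only so much charge; beyond that it saturates.
    return min(electrons, full_well)


if __name__ == "__main__":
    for photons in (0, 1_000, 5_000, 50_000):
        print(f"{photons:>6} photons -> {pixel_charge(photons):>6} electrons")
```

With these assumed values, a brighter scene yields more electrons until the pixel reaches its full-well limit, which is why very bright highlights clip to pure white.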

A photodiode responds only to the intensity of light, not its color, so sensors use a color filter array (CFA), most commonly the Bayer filter, which places a tiny red, green, or blue filter over each pixel, with twice as many green filters as red or blue. By interpolating the missing colors from adjacent pixels, a step known as demosaicing, the camera reconstructs full-color images.
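As a rough illustration of how color is rebuilt from a filtered sensor, the sketch below averages same-colored neighbors in a 3x3 window. It assumes an RGGB filter layout and uses hypothetical helper names (`bayer_color`, `demosaic`). Real cameras use more sophisticated, edge-aware interpolation, so treat this as a minimal example of the idea rather than a production algorithm.

```python
def bayer_color(row: int, col: int) -> str:
    """Filter color over pixel (row, col), assuming an RGGB Bayer layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic(raw: list[list[float]]) -> list[list[tuple[float, float, float]]]:
    """Estimate (R, G, B) per pixel by averaging same-color samples
    in the surrounding 3x3 window. Requires an image of at least 2x2."""
    height, width = len(raw), len(raw[0])
    image = []
    for r in range(height):
        out_row = []
        for c in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            # Visit the pixel and its neighbors, clipped at the image border.
            for nr in range(max(0, r - 1), min(height, r + 2)):
                for nc in range(max(0, c - 1), min(width, c + 2)):
                    color = bayer_color(nr, nc)
                    sums[color] += raw[nr][nc]
                    counts[color] += 1
            out_row.append(tuple(sums[k] / counts[k] for k in "RGB"))
        image.append(out_row)
    return image


if __name__ == "__main__":
    # A pure red scene: only pixels under red filters record any light.
    raw = [[1.0 if bayer_color(r, c) == "R" else 0.0 for c in range(4)]
           for r in range(4)]
    print(demosaic(raw)[1][1])
```

Each pixel records only one color directly; the other two values at that location are estimates, which is why demosaicing quality has a visible effect on fine detail.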

There are two main types of image sensors:

- CCD (Charge-Coupled Device): Captures charges in each pixel and transfers them across the chip to a readout node, where they are converted into voltage. CCDs provide high-quality, low-noise images but consume more power.

- CMOS (Complementary Metal-Oxide-Semiconductor): Each pixel has its own amplifier and converts its charge to a voltage directly at the pixel level. CMOS sensors are faster, cheaper, and more energy-efficient, making them the standard in modern devices.
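The difference between the two readout styles can be sketched as a toy simulation. The function names, the gain values, and the placement of the CCD output node at the column-0 end of the serial register are all assumptions made for illustration; real devices are far more involved.

```python
def ccd_readout(charges, gain=0.5):
    """CCD style: shift charge packets to one shared output node.

    Rows move one at a time into a serial register, which is then clocked
    out packet by packet. Every packet is converted to a voltage by the
    same amplifier, so a single gain applies to the whole frame.
    """
    rows = [list(row) for row in charges]  # work on a copy
    frame = []
    while rows:
        serial_register = rows.pop()  # bottom row shifts into the register
        line = []
        while serial_register:
            packet = serial_register.pop(0)  # packet nearest the node leaves first
            line.append(packet * gain)       # single charge-to-voltage conversion
        frame.append(line)
    frame.reverse()  # rows were read bottom-up; restore top-to-bottom order
    return frame


def cmos_readout(charges, pixel_gains, rows=None):
    """CMOS style: every pixel converts its own charge with its own amplifier.

    Because pixels are addressed directly, a subset of rows can be read
    without shifting the rest of the frame.
    """
    if rows is None:
        rows = range(len(charges))
    return [[charge * g for charge, g in zip(charges[r], pixel_gains[r])]
            for r in rows]


if __name__ == "__main__":
    charges = [[100, 200], [300, 400]]
    gains = [[0.5, 0.5], [0.5, 0.5]]
    print(ccd_readout(charges))
    print(cmos_readout(charges, gains, rows=range(1, 2)))
```

In this model the CCD must move every packet through one node, while the CMOS version can read any row on demand. The per-pixel gains also hint at why early CMOS sensors were noisier: small differences between the many amplifiers show up as fixed-pattern noise.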

After light is converted into electrical signals, additional circuits digitize the data, apply corrections, and process it into a final image. Combined with advanced algorithms and artificial intelligence, today’s sensors can deliver sharp, detailed, and vibrant photos, even in challenging lighting conditions.
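To make the digitize-and-correct stage concrete, here is a minimal sketch of two typical steps: quantizing a pixel voltage with an analog-to-digital converter (ADC), then subtracting a black level and applying gamma encoding. The function names and the specific numbers (10-bit ADC, 1.0 V reference, black level of 64, gamma of 2.2) are assumptions chosen for the example, not values from any particular sensor.

```python
def adc(voltage: float, v_ref: float = 1.0, bits: int = 10) -> int:
    """Quantize a voltage in [0, v_ref] to an integer code (assumed 10-bit ADC)."""
    max_code = (1 << bits) - 1
    clamped = min(max(voltage, 0.0), v_ref)  # out-of-range inputs clip
    return round(clamped / v_ref * max_code)


def correct(code: int, black_level: int = 64, bits: int = 10,
            gamma: float = 2.2) -> int:
    """Subtract the black level, normalize, and gamma-encode to 8 bits."""
    max_code = (1 << bits) - 1
    # Codes at or below the black level represent no light.
    linear = max(code - black_level, 0) / (max_code - black_level)
    # Gamma encoding brightens dark tones to better match human perception.
    return round(255 * linear ** (1 / gamma))


if __name__ == "__main__":
    for volts in (0.0, 0.1, 0.5, 1.0):
        code = adc(volts)
        print(f"{volts:.1f} V -> code {code:>4} -> 8-bit value {correct(code):>3}")
```

A real image pipeline adds many more stages, such as white balance, noise reduction, and lens correction, but each one follows the same pattern of transforming numeric pixel values.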

In simple terms, image sensors are the crucial link that allows light from the real world to be transformed into the digital images we see every day.

Major Breakthroughs Because of Image Sensors

Image sensors have not only transformed photography but also reshaped industries, everyday life, and even space exploration. From digital cameras to autonomous vehicles, their ability to convert light into electronic signals has enabled countless innovations. Here are some of the most important breakthroughs made possible by image sensors.

The Digital Photography Revolution

Perhaps the most visible breakthrough brought by image sensors was the shift from film photography to digital photography. Before the invention of image sensors, capturing a photo required film rolls, darkroom development, and physical prints. This process was time-consuming, costly, and limited the number of photos a person could take.

The invention of the CCD in 1969, and later the arrival of CMOS sensors, changed everything. These devices converted light directly into electrical signals that could be digitized, eliminating the need for film. By the late 1990s and early 2000s, digital cameras had become mainstream, giving people the ability to take thousands of pictures, review them instantly, and store them electronically.

This revolution democratized photography. What was once an expensive hobby became accessible to anyone, laying the foundation for today’s culture of instant visual sharing.

The Rise of Smartphones and Social Media

A second breakthrough came when image sensors became small and efficient enough to fit inside smartphones. The launch of the iPhone in 2007 marked a turning point: a high-quality digital camera was now always in your pocket.

CMOS technology made this possible because it consumed less power and was cheaper to manufacture than CCDs. Over the years, smartphone sensors grew more advanced, offering higher resolution, better low-light performance, and computational photography features.

This directly fueled the growth of social media platforms like Instagram, Snapchat, and TikTok, where images and videos became the primary form of communication. Billions of photos are now shared every day, creating a new digital culture that depends on image sensors.

Breakthroughs in Medical Imaging

Another major contribution of image sensors is in the field of medicine. They are used in a wide range of devices that help doctors see inside the human body without invasive surgery.

- Endoscopes use tiny sensors to transmit real-time images from inside the digestive system, enabling early detection of diseases.

- Capsule endoscopy allows patients to swallow a pill-sized camera that captures thousands of images as it moves through the gastrointestinal tract.

- Digital X-rays and mammography use advanced sensors to provide clearer results with lower radiation exposure.

These medical imaging breakthroughs have saved countless lives by making diagnosis faster, safer, and more accurate.

Space Exploration and Astronomy

Image sensors have also been critical in expanding our understanding of the universe. When NASA launched the Hubble Space Telescope in 1990, it used CCD sensors to capture some of the most iconic images of space ever seen. From galaxies billions of light-years away to detailed views of planets in our solar system, these sensors provided data that transformed astronomy.

Later missions, such as the Mars rovers and the James Webb Space Telescope, also rely on sophisticated image sensors to capture and analyze extraterrestrial environments. Without them, much of what we know about the cosmos would remain invisible.

Autonomous Vehicles and AI Vision

The development of autonomous vehicles is another area where image sensors play a central role. Self-driving cars rely on multiple sensors—lidar, radar, and especially cameras—to “see” their surroundings.

Modern CMOS sensors provide high-resolution, real-time images that are processed by artificial intelligence (AI) algorithms. These systems detect lane markings, traffic signs, pedestrians, and other vehicles, enabling safe navigation.

Beyond cars, image sensors are also used in drones, robotics, and industrial automation, where machine vision allows machines to perform tasks such as object recognition, quality inspection, and navigation.

Security and Surveillance

Security has been profoundly changed by image sensors. The rise of CCTV cameras, body-worn police cameras, and facial recognition systems has provided governments, businesses, and individuals with powerful tools for safety and monitoring.

For example, airports use advanced sensors for biometric security checks, while cities deploy them in smart surveillance systems to improve public safety. Although this raises debates about privacy, there is no doubt that image sensors have revolutionized security worldwide.

Everyday Applications and Beyond

Outside these major breakthroughs, image sensors quietly power many aspects of daily life:

- Barcode scanners in supermarkets.

- Video conferencing for remote work.

- AR and VR devices for immersive experiences.

- Smart home devices, like video doorbells and AI assistants with vision.

Their versatility shows how deeply integrated image sensors are in modern society.